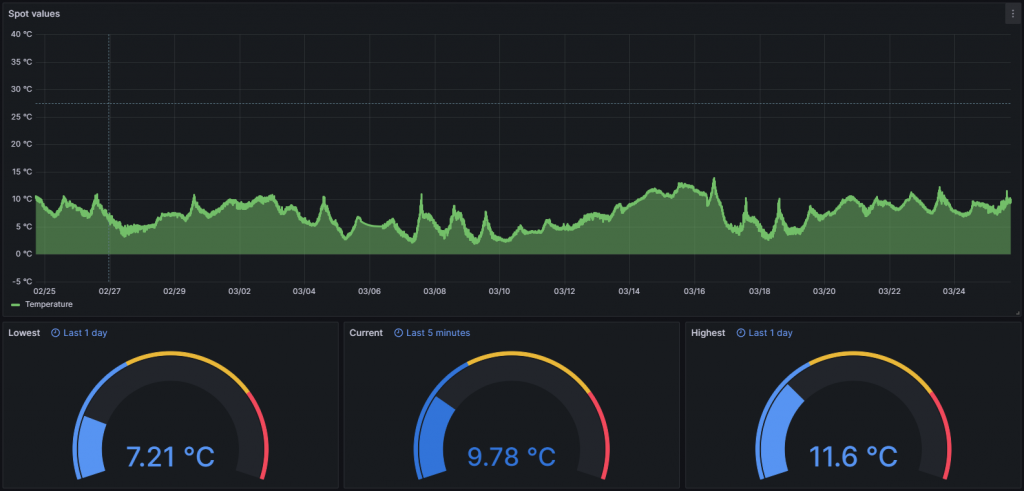

TL;DR: I bought a Raspberry Pi Pico W and a temperature sensor for about $20. It hangs on my balcony and sends telemetry (temperature and barometric pressure) to Azure every minute. From there, a self-hosted Grafana dashboard picks up and presents the data, and all of this costs zero dollars per month. All in all, this is what February and March 2024 looked like in Malmö, Sweden:

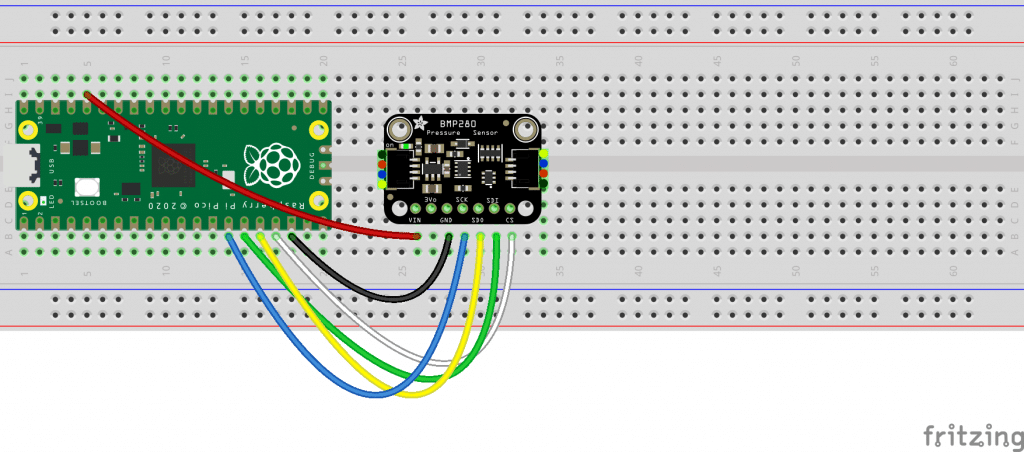

For the hardware part, I take no credit at all. I did the whole thing using this open-source GitHub repo. Even the following image, which shows the wiring in detail, comes from that repository.

Now, on the software part, I did do some magic. My goal was to collect measurements every minute, save them somewhere and then visualize them, so let’s take it from the beginning.

Collecting the values

It happens in two steps.

Step 1. The Pico (which is connected to my home WiFi) reads the measurements from the sensor and sends them to an Azure Function every minute. Here’s the code for that (big chunks of it come from GitHub Copilot):

    import time

    from machine import Pin, SPI
    import network
    import urequests

    from bmp280 import BMP280SPI


    def send_data_to_azure(temp, pressure):
        # Placeholder: use your own Function URL, including the function key
        azure_function_url = "{https://func-xxxxxxx.azurewebsites.net/api/Function1?code=xxxxxxxxxxxxxxx}"
        data = {'temperature': temp, 'pressure': pressure}
        headers = {'Content-Type': 'application/json'}
        response = urequests.post(azure_function_url, json=data, headers=headers)
        print("Azure Function Response:", response.text)
        response.close()  # release the socket, otherwise the Pico leaks memory over time


    while True:
        try:
            # Connect to WiFi
            wlan = network.WLAN(network.STA_IF)
            led_onboard = Pin("LED", Pin.OUT)
            if not wlan.isconnected():
                print('Connecting...')
                # Enable the interface
                wlan.active(True)
                # Connect
                wlan.connect("{WiFi-Name}", "{WiFi-Password}")
                # Wait till connection is established, blinking the LED meanwhile
                while not wlan.isconnected():
                    led_onboard.value(1)
                    time.sleep_ms(300)
                    led_onboard.value(0)
                    time.sleep_ms(300)
            print('Connection established.')
            print('Network Settings:', wlan.ifconfig())

            while True:
                # Set up the BMP280 on SPI bus 1 and take a reading
                spi1_sck = Pin(10)
                spi1_tx = Pin(11)
                spi1_rx = Pin(12)
                spi1_csn = Pin(13, Pin.OUT, value=1)
                spi1 = SPI(1, sck=spi1_sck, mosi=spi1_tx, miso=spi1_rx)
                bmp280_spi = BMP280SPI(spi1, spi1_csn)
                readout = bmp280_spi.measurements
                temperature = readout['t']
                pressure = readout['p']
                print(f"Temperature: {temperature} °C, pressure: {pressure} hPa.")
                send_data_to_azure(temperature, pressure)

                # LED stays on while waiting for the next reading
                led_onboard.value(1)
                time.sleep(60)
                led_onboard.value(0)
                time.sleep_ms(500)
        except Exception as e:
            # Print the exception and restart the application
            print("Error:", e)
            print("Restarting application...")
            time.sleep(5)  # Wait for 5 seconds before restarting

Step 2. The Azure Function receives the measurements and saves them to Azure Table Storage. Here’s the code for that:

[Function("Function1")]

public async Task<IActionResult> Run([HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequest req, [FromBody] string temperature, [FromBody] string pressure)

{

_logger.LogInformation($"Reported temperature: {temperature} °C and pressure: {pressure} hPa.");

string storageConnectionString = Environment.GetEnvironmentVariable("TableStorageConnString")!;

string tableName = Environment.GetEnvironmentVariable("TableName")!;

var tableClient = new TableClient(

new Uri(storageConnectionString),

tableName,

new DefaultAzureCredential());

DateTime now = DateTime.UtcNow;

var tableEntity = new TableEntity(now.ToString("yyyy-MM-dd"), Guid.NewGuid().ToString())

{

{ "Timestamp", now.ToString("s") + "Z" },

{ "Temperature", double.Parse(temperature) },

{ "Pressure", double.Parse(pressure) }

};

await tableClient.AddEntityAsync(tableEntity);

_logger.LogInformation(

$"Created row {tableEntity.RowKey}: Temperature: {tableEntity["Temperature"]}°C. Pressure: ${tableEntity.GetDouble("Pressure")} hPa.");

return new OkObjectResult($"Data saved to Azure Table Storage");

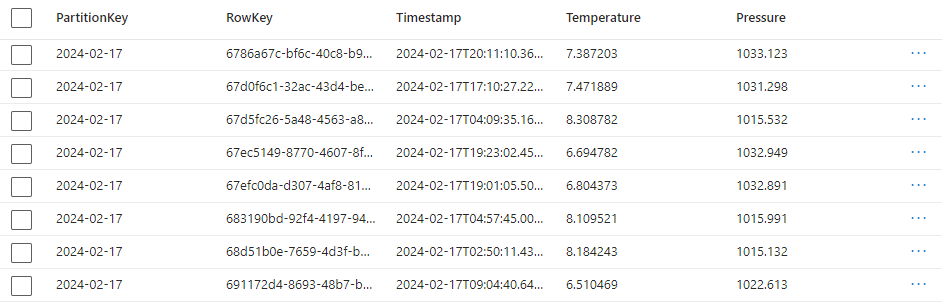

}Here’s what the values look like, nothing weird:
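For readers who don't read C#, the row layout can be sketched in a few lines of plain Python. This is only an illustration of the scheme the function uses (one partition per UTC day, a random GUID as row key). `build_entity` is a hypothetical helper, not part of any Azure SDK, and nothing here talks to Azure.

```python
import uuid
from datetime import datetime, timezone


def build_entity(temperature, pressure, now=None):
    """Mirror the row layout written by the Azure Function (illustration only)."""
    now = now or datetime.now(timezone.utc)
    return {
        # One partition per UTC day, e.g. "2024-03-01"
        "PartitionKey": now.strftime("%Y-%m-%d"),
        # A random GUID keeps row keys unique within the partition
        "RowKey": str(uuid.uuid4()),
        # Same shape as now.ToString("s") + "Z" in the C# code
        "Timestamp": now.strftime("%Y-%m-%dT%H:%M:%S") + "Z",
        # The function receives strings and stores them as doubles
        "Temperature": float(temperature),
        "Pressure": float(pressure),
    }


sample_time = datetime(2024, 3, 1, 6, 30, tzinfo=timezone.utc)
entity = build_entity("4.7", "1013.2", sample_time)
print(entity["PartitionKey"], entity["Timestamp"])  # 2024-03-01 2024-03-01T06:30:00Z
```

With one reading per minute, each daily partition ends up with roughly 1,440 rows.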

Every day at 4 am, an Azure Function collects all the rows that are older than one year and deletes them. This is because, knowing myself, I could forget that this exists, haha. Here’s the code for that:

    [Function("Function2")]
    public async Task RunF2([TimerTrigger("0 0 4 * * *")] FunctionContext context)
    {
        _logger.LogInformation("Time to remove some entries...");

        string storageConnectionString = Environment.GetEnvironmentVariable("TableStorageConnString")!;
        string tableName = Environment.GetEnvironmentVariable("TableName")!;

        var tableClient = new TableClient(
            new Uri(storageConnectionString),
            tableName,
            new DefaultAzureCredential());

        DateTime now = DateTime.UtcNow;

        // "le" rather than "eq": yyyy-MM-dd keys sort chronologically, so this also
        // catches older days that a skipped run would otherwise leave behind.
        // Both keys are selected so that the delete call below has them.
        var query = tableClient.QueryAsync<TableEntity>(
            filter: $"PartitionKey le '{now.AddYears(-1).ToString("yyyy-MM-dd")}'",
            select: new[] { "PartitionKey", "RowKey" });

        await foreach (TableEntity entity in query)
        {
            // One async delete is enough; the extra synchronous call was redundant
            await tableClient.DeleteEntityAsync(entity.PartitionKey, entity.RowKey);
            _logger.LogInformation($"Deleted row {entity.RowKey} with PartitionKey {entity.PartitionKey}");
        }
    }
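Because the PartitionKey is an ISO date (yyyy-MM-dd), string order and chronological order coincide, which is what makes a date-based filter on the key workable. Here is a small Python sketch of that property. It is an illustration only, and `cutoff_partition_key` and `is_expired` are hypothetical helper names that don't appear in the function app.

```python
from datetime import datetime, timezone


def cutoff_partition_key(now):
    """Partition key of the day exactly one year before `now`."""
    try:
        cutoff = now.replace(year=now.year - 1)
    except ValueError:
        # 29 February has no counterpart in a non-leap year; fall back to
        # 28 February, which is also what .NET's AddYears(-1) returns.
        cutoff = now.replace(year=now.year - 1, day=28)
    return cutoff.strftime("%Y-%m-%d")


def is_expired(partition_key, now):
    # ISO dates sort lexicographically in chronological order,
    # so a plain string comparison is enough.
    return partition_key <= cutoff_partition_key(now)


now = datetime(2024, 3, 15, 4, 0, tzinfo=timezone.utc)
print(cutoff_partition_key(now))      # 2023-03-15
print(is_expired("2023-03-14", now))  # True
print(is_expired("2023-03-16", now))  # False
```

The same comparison would not work with a day-first format such as dd-MM-yyyy, which is a good reason to stick with ISO dates for keys.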

Visualizing the data

I love Power BI, I do, but it costs money while Grafana is free, so…

For some reason, Azure Table Storage is not one of the data sources available by default, and I haven’t used Azure Data Explorer that much yet, so I went for the second-best option: I used the JSON API plugin as the data source. Long story short, it needs a base URL, and it expects certain endpoints to exist and to deliver values in a certain format. Nothing too complicated. So what did I do? Even more Azure Functions: I created that API in the Azure Function App. Here’s the code for those endpoints (pay attention to the routes):

    [Function("Function3MetricPayloadOptions")]
    public IActionResult RunGrafanaMetricPayloadOptions(
        [HttpTrigger(AuthorizationLevel.Anonymous, "post", Route = "grafana/metric-payload-options")]
        HttpRequest req)
    {
        GrafanaMetricPayloadValue temperature = new GrafanaMetricPayloadValue()
        {
            label = "Temperature",
            value = "temperature"
        };

        GrafanaMetricPayloadValue pressure = new GrafanaMetricPayloadValue()
        {
            label = "Pressure",
            value = "pressure"
        };

        return new OkObjectResult(System.Text.Json.JsonSerializer.Serialize(new GrafanaMetricPayloadValue[] { temperature, pressure }));
    }

    [Function("Function3Metrics")]
    public IActionResult RunGrafanaMetrics(
        [HttpTrigger(AuthorizationLevel.Anonymous, "post", Route = "grafana/metrics")]
        HttpRequest req)
    {
        GrafanaMetricPayload gmp = new GrafanaMetricPayload()
        {
            name = "metric",
            type = "multi-select"
        };

        GrafanaMetric gm = new GrafanaMetric()
        {
            label = "Metric",
            value = "metric",
            payloads = new GrafanaMetricPayload[] { gmp }
        };

        return new OkObjectResult(System.Text.Json.JsonSerializer.Serialize(new GrafanaMetric[] { gm }));
    }

    [Function("Function3GrafanaQuery")]
    public async Task<IActionResult> RunGrafanaQuery(
        [HttpTrigger(AuthorizationLevel.Anonymous, "post", Route = "grafana/query")]
        HttpRequest req)
    {
        // Grafana posts the selected time range in the request body
        string body = await new StreamReader(req.Body).ReadToEndAsync();
        GrafanaRequest dateRange = System.Text.Json.JsonSerializer.Deserialize<GrafanaRequest>(body)!;
        _logger.LogInformation($"Querying data for range: {dateRange.range.from} to {dateRange.range.to}");

        string storageConnectionString = Environment.GetEnvironmentVariable("TableStorageConnString")!;
        string tableName = Environment.GetEnvironmentVariable("TableName")!;

        var tableClient = new TableClient(
            new Uri(storageConnectionString),
            tableName,
            new DefaultAzureCredential());

        DateTime fromDateTime = DateTime.Parse(dateRange.range.from);
        DateTime toDateTime = DateTime.Parse(dateRange.range.to);

        var query = tableClient.QueryAsync<TableEntity>(
            filter:
                $"Timestamp ge datetime'{fromDateTime.ToString("s") + "Z"}' and Timestamp le datetime'{toDateTime.ToString("s") + "Z"}'",
            select: new[] { "Timestamp", "Temperature", "Pressure" });

        List<GrafanaResponse> records = new List<GrafanaResponse>();

        GrafanaResponse temperature = new GrafanaResponse();
        temperature.target = "Temperature";

        GrafanaResponse pressure = new GrafanaResponse();
        pressure.target = "Pressure";

        // Each datapoint is a [value, unix timestamp in milliseconds] pair
        await foreach (TableEntity entity in query)
        {
            double temp = entity.GetDouble("Temperature").Value;
            long timestamp = entity.GetDateTimeOffset("Timestamp").Value.ToUnixTimeMilliseconds();
            double press = entity.GetDouble("Pressure").Value;

            temperature.datapoints.Add(new object[] { temp, timestamp });
            pressure.datapoints.Add(new object[] { press, timestamp });
        }

        records.Add(temperature);
        //records.Add(pressure);

        string json = System.Text.Json.JsonSerializer.Serialize(records);
        //_logger.LogInformation($"Returning data: {json}");

        return new OkObjectResult(json);
    }

Pricing

Although this thing runs all day long, I’m still flying under the radar cost-wise. All those Azure Functions run in the same Azure Function App, which is hosted on a serverless Linux plan (Y1, the Consumption plan). I host Grafana myself, and the Table Storage also costs nothing, as I’m under the limits of the free tiers.
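To put "under the limits" into numbers, here is a quick back-of-the-envelope calculation in Python. The free-grant figure is an assumption on my part (1,000,000 executions per month for the Consumption plan), so check the current Azure pricing page before relying on it. The calls Grafana makes to the query endpoints are ignored, since they depend on how often the dashboard is open.

```python
MINUTES_PER_DAY = 24 * 60

# Assumption: monthly free grant of the Consumption plan, in executions.
FREE_EXECUTIONS_PER_MONTH = 1_000_000


def monthly_executions(days=31, readings_per_minute=1, cleanup_runs_per_day=1):
    """Function executions caused by the Pico plus the daily cleanup timer."""
    return days * (MINUTES_PER_DAY * readings_per_minute + cleanup_runs_per_day)


def rows_kept(retention_days=365, readings_per_minute=1):
    """Upper bound on table rows with a one-year retention."""
    return retention_days * MINUTES_PER_DAY * readings_per_minute


used = monthly_executions()
print(used)                                              # 44671
print(round(100 * used / FREE_EXECUTIONS_PER_MONTH, 1))  # 4.5 (percent of the assumed grant)
print(rows_kept())                                       # 525600
```

So even in a 31-day month, one reading per minute uses only a few percent of the assumed execution grant, and the table never holds much more than half a million small rows.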

Future improvements

The whole API thing is a bit clunky, and I do want to test Azure Data Explorer. When I do, I will make sure to blog about it. I also want to try MQTT instead of the Functions, just to make it more relevant to Azure IoT.
